Event-Driven Architectures with Kafka and Kotlin on Upstash

Welcome to the third part of my series on Event-Driven Architectures.

So far we have covered:

- Event-Driven Architectures with Kafka and Java Spring-Boot + Revision 1

- Event-Driven Architectures with Kafka and Python + Revision 1

This article is about how to realise an Event-Driven Architecture with Kotlin, but with a few differences compared to the previous articles:

- As mentioned before we will use Kotlin instead of Java.

- Instead of Maven we will use Gradle for dependency management and building our application.

- The project has been created with IntelliJ’s built-in Spring Initializr and not the web-based one.

- A single project (service) is used for both the producer and the consumer. This makes no real difference for this tutorial and is easier to handle.

- Instead of using a local Kafka instance started via docker-compose, an external service called Upstash will provide all Kafka-related infrastructure.

Upstash is a true-serverless offering for Kafka and Redis that provides an on-demand, pay-as-you-go solution without having to fiddle around with things like hardware, virtual machines or docker containers.

I have written an introductory post on Upstash already, called “First Steps with Upstash for Kafka.”

https://twissmueller.medium.com/first-steps-with-upstash-for-kafka-6d4d023da590

Introduction

Event-driven architectures have become the thing over the last few years, with Kafka being the de facto standard when it comes to tooling.

This post provides a complete example of an event-driven architecture, implemented with a Kotlin Spring-Boot service that communicates via a Kafka cluster on Upstash.

The main goal of this tutorial is to provide a working example without going into too many details, which, in my opinion, unnecessarily distract from the main task of getting “something” up and running as quickly as possible.

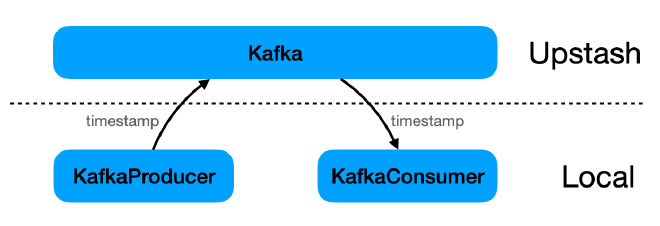

We have a couple of building blocks, mainly:

- Infrastructure (Kafka on Upstash)

- Producer (Kotlin, Spring-Boot)

- Consumer (Kotlin, Spring-Boot)

The producer’s only task is to periodically send an event to Kafka. This event just carries a timestamp. The consumer’s job is to listen for this event and print the timestamp.

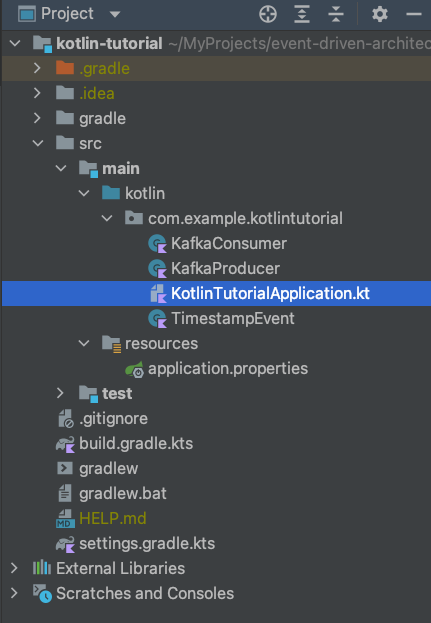

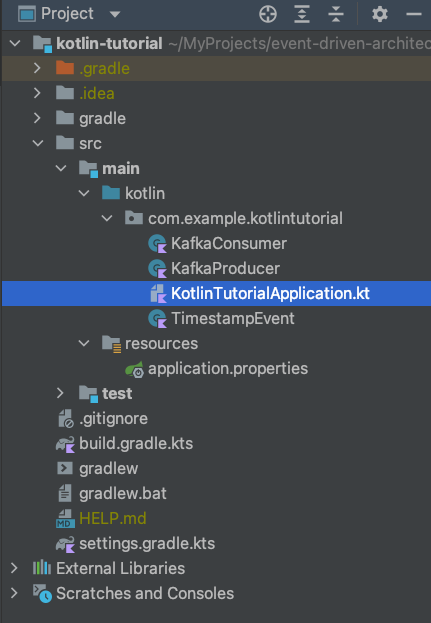

The whole implementation resulted in the following project structure:

The complete code can be downloaded from here.

The project can be built directly on the command line or imported into an IDE such as IntelliJ.

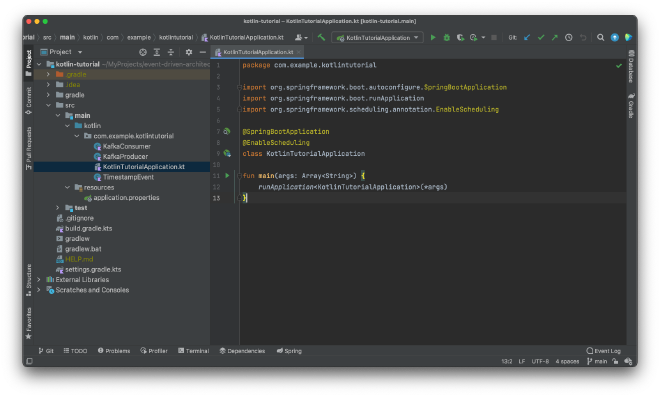

Code Setup

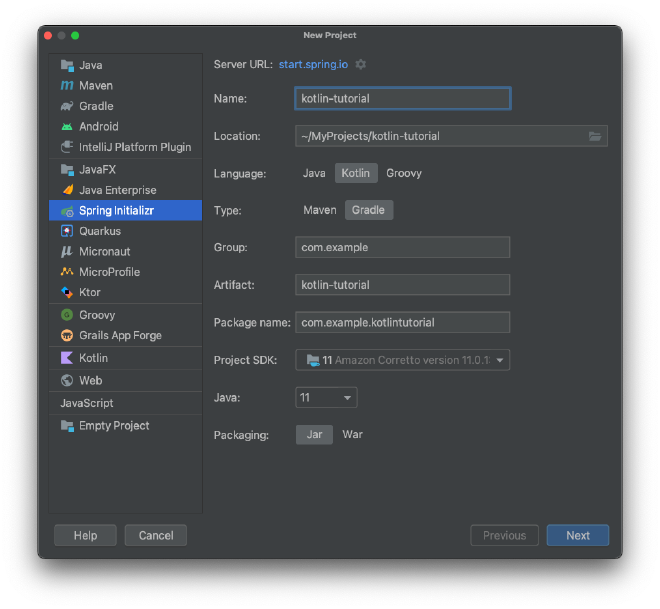

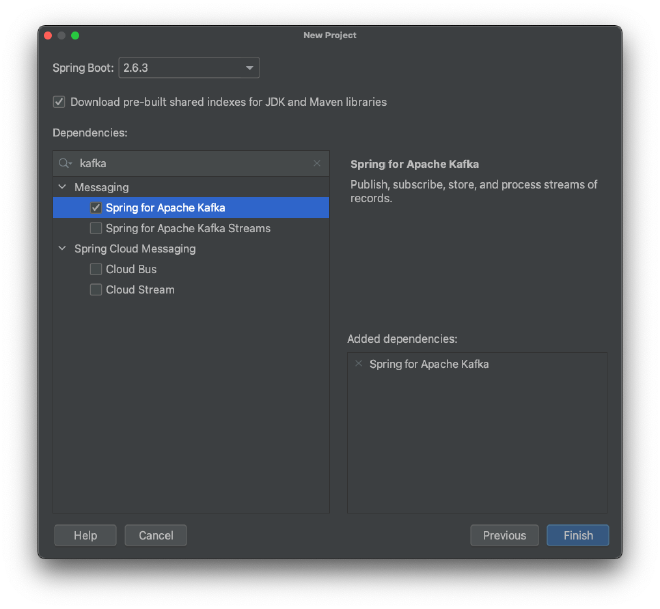

Users of IntelliJ’s Ultimate Edition can use the Spring Initializr directly from the IDE’s “New Project” dialog. If it is not available, you can always create the project over at https://start.spring.io. Then download and unpack the ZIP and import everything into IntelliJ or any other IDE or editor, whatever you prefer.

First, I created a new project that uses Kotlin as its language and Gradle for dependency and build management.

In the next step I have just added one dependency for Spring. The rest I have added manually later.
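The post does not list the dependencies that were added by hand. As a rough sketch, assuming the Gradle Kotlin DSL (`build.gradle.kts`) and versions managed by the Spring Boot Gradle plugin, the `dependencies` block for a project like this one could look as follows. The exact set is an assumption and is not taken from the downloadable project.

```kotlin
// build.gradle.kts (fragment): assumed dependency set, not copied from the original project
dependencies {
    // Core Spring Boot starter (auto-configuration, logging)
    implementation("org.springframework.boot:spring-boot-starter")
    // Spring for Apache Kafka: KafkaTemplate, @KafkaListener, JSON (de)serialisers
    implementation("org.springframework.kafka:spring-kafka")
    // Jackson support for Kotlin classes and for java.time types such as ZonedDateTime
    implementation("com.fasterxml.jackson.module:jackson-module-kotlin")
    implementation("com.fasterxml.jackson.datatype:jackson-datatype-jsr310")
    // Kotlin reflection, used by Spring and by jackson-module-kotlin
    implementation("org.jetbrains.kotlin:kotlin-reflect")
}
```

No versions are given because the Spring Boot plugin normally supplies them. If your build does not use it, you would have to pin them yourself.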

After adding the files for the producer and the consumer, the project looked like this:

What goes into those files is explained in the next chapters.

Producer

As mentioned above, the producer is “producing” events that hold a timestamp and sending them out via Kafka to everyone who is interested in receiving them.

Events in Kafka are basically key-value pairs. For those, we need to define a serialiser for producing them and a deserialiser for consuming them. I have done this in the application.properties.

spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer

spring.kafka.producer.value-serializer=org.springframework.kafka.support.serializer.JsonSerializer

The key is a simple String, whereas the value needs to be JSON.
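The value type used in the rest of this tutorial is called `TimestampEvent`, but its definition is not shown in the post. Here is a minimal sketch, assuming it is a plain data class with a single `timestamp` field. The `main` function is only there to make the snippet runnable on its own.

```kotlin
import java.time.ZonedDateTime

// Assumed shape of the event: one field carrying the creation time.
data class TimestampEvent(val timestamp: ZonedDateTime)

fun main() {
    val event = TimestampEvent(timestamp = ZonedDateTime.now())
    // ZonedDateTime.toString() yields an ISO-8601-style string that parse() can read back.
    val roundTripped = ZonedDateTime.parse(event.timestamp.toString())
    println("Created: ${event.timestamp}, parsed back: $roundTripped")
}
```

How the timestamp appears inside the JSON payload depends on the Jackson configuration in use, so no example payload is shown here.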

The producer has one method for sending out the event, which is triggered every 5 seconds. The @Scheduled annotation makes this easy to accomplish.

First, a timestamp is created, and then it is “sent out” to the “tutorial-topic”.

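    import org.springframework.kafka.core.KafkaTemplate
    import org.springframework.scheduling.annotation.Scheduled
    import org.springframework.stereotype.Component
    import java.time.ZonedDateTime

    @Component
    class KafkaProducer(private val kafkaTemplate: KafkaTemplate<String, TimestampEvent>) {

        // Runs every 5000 ms and publishes an event carrying the current time.
        @Scheduled(fixedRate = 5000)
        fun send() {
            val event = TimestampEvent(timestamp = ZonedDateTime.now())
            // No key is passed, so the record goes to "tutorial-topic" with a null key.
            kafkaTemplate.send("tutorial-topic", event)
            println("Sent: ${event.timestamp}")
        }
    }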
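One thing worth checking if the producer never fires: Spring only invokes `@Scheduled` methods when scheduling has been enabled, typically with `@EnableScheduling` on a configuration class. The application class is not shown in this post, so the following is a sketch with an assumed class name (`KotlinTutorialApplication`). The package name is the one that appears in the `trusted.packages` property of the consumer configuration.

```kotlin
package com.example.kotlintutorial

import org.springframework.boot.autoconfigure.SpringBootApplication
import org.springframework.boot.runApplication
import org.springframework.scheduling.annotation.EnableScheduling

// Class name is hypothetical; the generated project may use a different one.
@SpringBootApplication
@EnableScheduling // without this, @Scheduled(fixedRate = 5000) is never triggered
class KotlinTutorialApplication

fun main(args: Array<String>) {
    runApplication<KotlinTutorialApplication>(*args)
}
```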

That is all for the producer. On to the consumer …

Consumer

It is time to receive the events with the timestamps that have been sent out by the producer.

Again, we start with a bit of configuration. Similar to the producer, we need to specify which data types are used for the key and the value of the events.

The consumer also needs to specify the consumer group it belongs to.

Then we need to tell Kafka what to do when there is no initial offset in Kafka or if the current offset does not exist anymore. With auto-offset-reset=earliest we tell the system to use the earliest offset it can find.
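To make the rule concrete, here is a small, simplified model of that decision in plain Kotlin. It is not Kafka's implementation. The function and parameter names are made up for illustration, and it only covers the `earliest` and `latest` settings.

```kotlin
// Simplified model of where a consumer starts reading a partition.
// logStart = oldest offset still retained, logEnd = offset of the next record to be written.
enum class OffsetReset { EARLIEST, LATEST }

fun startingOffset(committed: Long?, logStart: Long, logEnd: Long, reset: OffsetReset): Long {
    // A committed offset that still lies inside the retained log is used as-is.
    if (committed != null && committed in logStart..logEnd) return committed
    // No committed offset, or it points outside the retained log: apply the reset policy.
    return when (reset) {
        OffsetReset.EARLIEST -> logStart
        OffsetReset.LATEST -> logEnd
    }
}

fun main() {
    println(startingOffset(null, 0L, 42L, OffsetReset.EARLIEST)) // new group: start at the beginning
    println(startingOffset(null, 0L, 42L, OffsetReset.LATEST))   // new group: only new records
    println(startingOffset(10L, 0L, 42L, OffsetReset.EARLIEST))  // valid committed offset wins
    println(startingOffset(3L, 20L, 42L, OffsetReset.EARLIEST))  // offset 3 no longer exists: back to log start
}
```

With `earliest`, a brand-new consumer group therefore also sees events that were produced before it started, which is convenient for a tutorial like this one.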

We also need to provide a comma-delimited list of packages which are allowed to be deserialised with the property spring.kafka.consumer.properties.spring.json.trusted.packages.

Here is the complete configuration in the application.properties:

spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.value-deserializer=org.springframework.kafka.support.serializer.JsonDeserializer

spring.kafka.consumer.group-id=tutorial-group

spring.kafka.consumer.auto-offset-reset=earliest

spring.kafka.consumer.properties.spring.json.trusted.packages=com.example.kotlintutorial

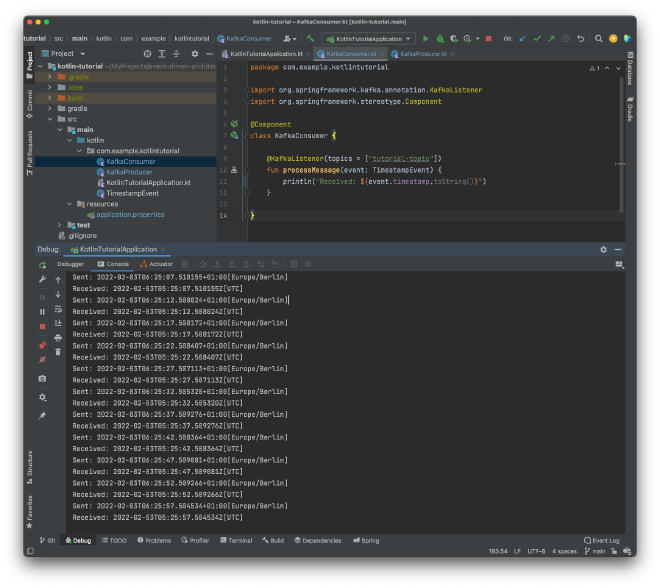

Last but not least, we have the consumer in KafkaConsumer.kt. We only have to specify a listener on a topic by using the @KafkaListener annotation and define the action to perform. In this case, the timestamp just gets printed.

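    import org.springframework.kafka.annotation.KafkaListener
    import org.springframework.stereotype.Component

    @Component
    class KafkaConsumer {

        // Invoked for every record on "tutorial-topic"; the JSON value arrives as a TimestampEvent.
        @KafkaListener(topics = ["tutorial-topic"])
        fun processMessage(event: TimestampEvent) {
            println("Received: ${event.timestamp}")
        }
    }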

Now that we have all the code in place, the only thing missing is the infrastructure that will provide our Kafka cluster.

Infrastructure

As mentioned in the introduction, Upstash will provide the Kafka cluster.

In case you don’t have an account with a running cluster there, please see this article for the first steps.

https://twissmueller.medium.com/first-steps-with-upstash-for-kafka-6d4d023da590

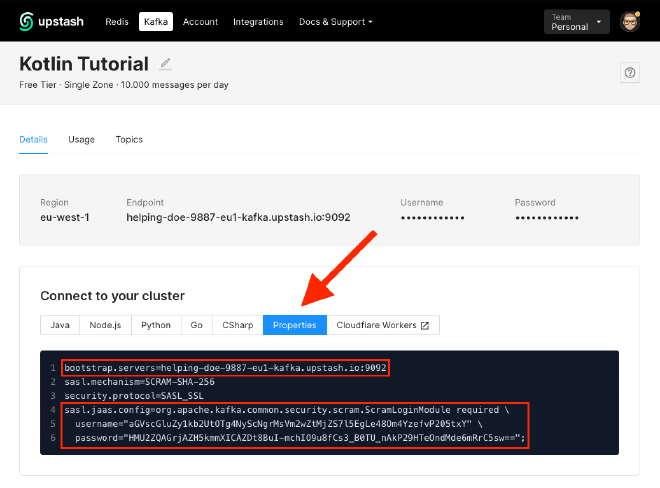

In contrast to the Java tutorial, I have put the whole configuration into the properties file.

When your cluster is in place, you need to change two properties in the application.properties.

First, the application needs to know the address of the cluster. This is set in spring.kafka.bootstrap-servers.

spring.kafka.bootstrap-servers=CHANGE_ME

Then a couple of security-related properties need to be set. The only one that has to be changed is spring.kafka.properties.sasl.jaas.config.

spring.kafka.properties.security.protocol=SASL_SSL

spring.kafka.properties.sasl.mechanism=SCRAM-SHA-256

spring.kafka.properties.sasl.jaas.config=CHANGE_ME
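For orientation, the JAAS value for SCRAM authentication generally has the shape shown in the following sketch. This is not the configuration from the original project. `KAFKA_USERNAME` and `KAFKA_PASSWORD` are hypothetical environment variable names, resolved through Spring's `${...}` property placeholders so that the credentials stay out of the file.

```properties
# Sketch only: the environment variable names are assumptions, the real values come from your Upstash console
spring.kafka.properties.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="${KAFKA_USERNAME}" password="${KAFKA_PASSWORD}";
```

Pasting the complete value from the console works just as well. The placeholder variant is merely one way to avoid committing secrets to version control.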

The values for bootstrap.servers and sasl.jaas.config are generated for you and can simply be copied over from your Upstash console. Don’t copy what is shown below, because that exact configuration belonged to the cluster I created for this tutorial, which was deleted right after I was done.

Run the Example Code

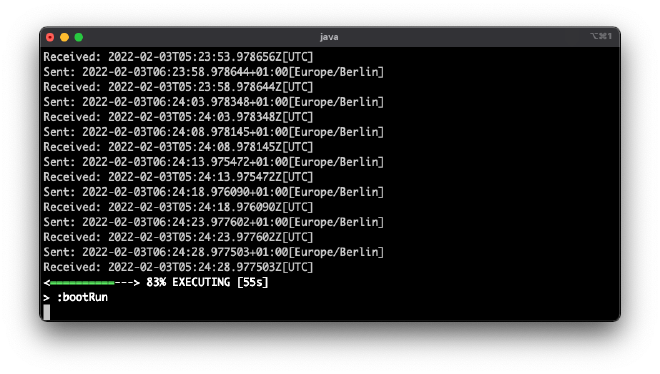

It is time to run everything. First, make sure your cluster on Upstash is running.

Either run the project directly from the IDE or from the terminal with the command:

./gradlew bootRun

You should now see something similar to this in the terminal.

The IDE’s console output looks like this:

Conclusion

This concludes this introductory tutorial on how to create an event-driven architecture using Kafka and Kotlin on Upstash.

You now know how to

- create a cluster

- send events with a producer

- receive events with a consumer

- do all this in Kotlin

Thank you for reading!

This post contains affiliate links and has been sponsored by Upstash.