Ensuring Data Privacy with Memphis Functions - Part 1

A Primer on Stations and Functions #

As a mobile developer who also works on server backends and cloud infrastructure with AWS, I have long wished for a simple and visually appealing cloud service that lets me create data pipelines quickly and easily.

In general, I find it easier to have a backend that contains most of the business logic, while keeping the mobile app as simple as possible. This way, when the business logic changes, I can simply redeploy my own backend code instead of having to release a new app version, which takes more time.

I am also a big fan of structuring or defining use cases as pipelines. This involves having a defined input and output, and then plugging in the different steps that make up your business flow.

While AWS is the dominant player in the market, I often find its web UI complicated, especially when I want to quickly try things out during the prototyping phase without relying on the AWS SDK or similar tools. I simply want to click around and explore. None of the existing solutions I have tried has fully satisfied me, so I am still searching for one that is simple, visually appealing, and easy to use.

I may have just found a solution with memphis.dev, which has recently released their “Functions” offering. With Memphis, you can create a “station”, which in my opinion is comparable to a Kafka topic, and easily define a data pipeline using a visual approach. These stations also have producers and consumers.

However, the important part for me is that it is possible to manipulate the data being produced and received by the station in various ways before the consumers can receive the messages. Memphis already provides a variety of useful functions that can be customized based on your specific use case. Alternatively, you can define your own functions in a GitHub repository that you own and link it to your Memphis account.

Let’s imagine a fictional scenario: A person arrives at a hospital for treatment and goes through the registration process. As part of this process, the person is required to provide personal data, which will be utilized throughout the hospital.

While certain data may be relevant for certain departments within the hospital, other data may be completely irrelevant. For instance, the food preparation staff does not need to know the patients’ social security numbers, and the billing department does not need to know their dietary preferences. In fact, it is not just that they do not care about the data; they may also be legally prohibited from processing and storing it under the GDPR. Therefore, the data must be scrubbed appropriately before it reaches each consumer.
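To make the scenario concrete, here is a minimal Python sketch of per-department scrubbing using an allow-list. It is only an illustration of the idea and not how Memphis implements anything; the department names, field names, and the `scrub_for` helper are all hypothetical.

```python
# Hypothetical allow-lists: the fields each department is permitted to see.
ALLOWED_FIELDS = {
    "kitchen": {"patient_id", "name", "dietary_preferences"},
    "billing": {"patient_id", "name", "social_security_number"},
}


def scrub_for(department: str, record: dict) -> dict:
    """Return a copy of the record that keeps only the fields allowed for the department."""
    allowed = ALLOWED_FIELDS[department]
    return {key: value for key, value in record.items() if key in allowed}


# Made-up registration record with placeholder values.
registration = {
    "patient_id": 17,
    "name": "Jane Doe",
    "social_security_number": "000-00-0000",
    "dietary_preferences": "vegetarian",
}

kitchen_view = scrub_for("kitchen", registration)
billing_view = scrub_for("billing", registration)
print(kitchen_view)
print(billing_view)
```

An allow-list fails safe: a field that is added to the registration record later stays hidden from every department until someone explicitly permits it.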

Additionally, we will cover the use case of enriching incoming data with new data that is not created or sent by the producers. For example, imagine a mobile app where a required feature has not been implemented. Instead of having to re-release the app, we can simply solve the problem by adding a function to our pipeline.

In this post, I will create a pipeline that enriches incoming data with a timestamp and removes another data field before consumption. I will walk through the following steps in detail:

- Create a client user

- Create a station

- Create a JSON Web Token

- Produce a message

- Consume a message

- Attach a function for adding a timestamp

- Attach a function for removing a data field

With that, we will have defined a simple pipeline. This pipeline takes an input message from a producer and distributes it to any consumer who has subscribed to the station.

In a second post, we will continue by introducing private functions to implement our own business logic. These functions are hosted in the developer’s own GitHub repository. Additionally, we will also take a look at how to ensure schema consistency across our services.

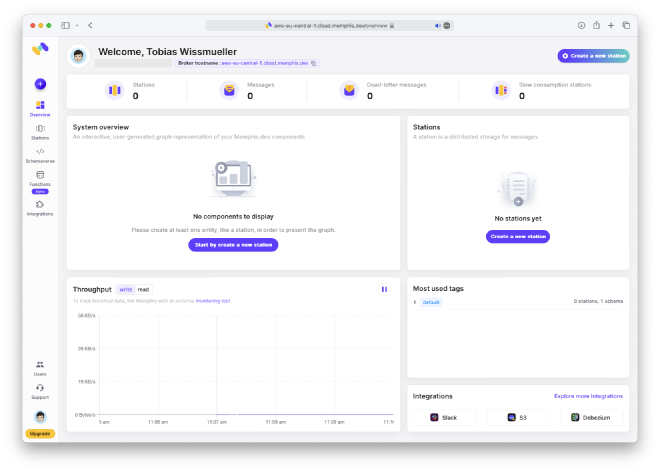

After creating an account, we begin with an empty Overview.

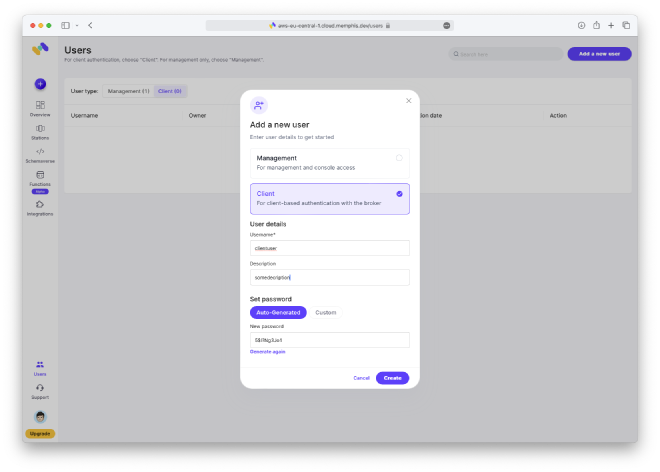

Create Client User #

To begin, we will create a client user by following these steps: click on “Users → Client → Add a new user”. Make sure to write down the password, as it will be required later.

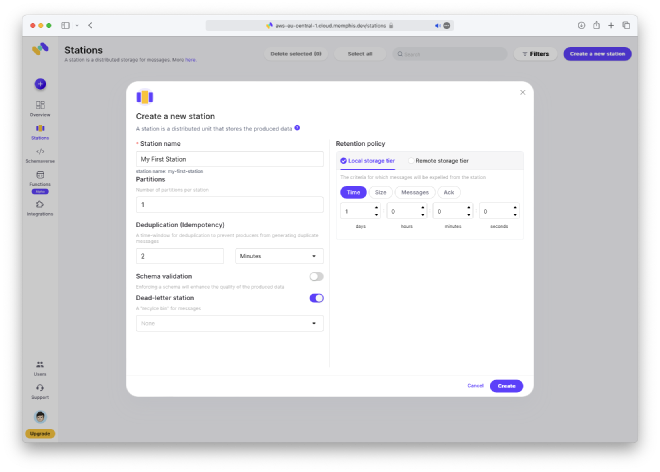

Create Station #

Next, we create the main part, which is the station. If you want to learn more about what a station is, you can read up on it in the Memphis Station Documentation. If you have previous experience with Kafka, this concept will be familiar to you.

Click on “Stations → Create a new station”. Simply enter the name and leave all other fields unchanged.

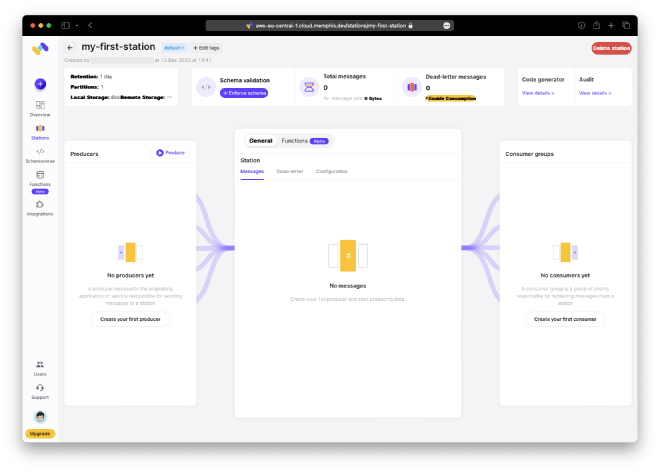

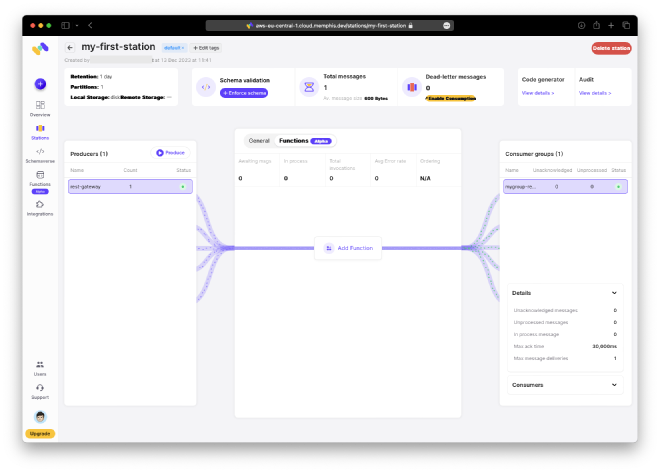

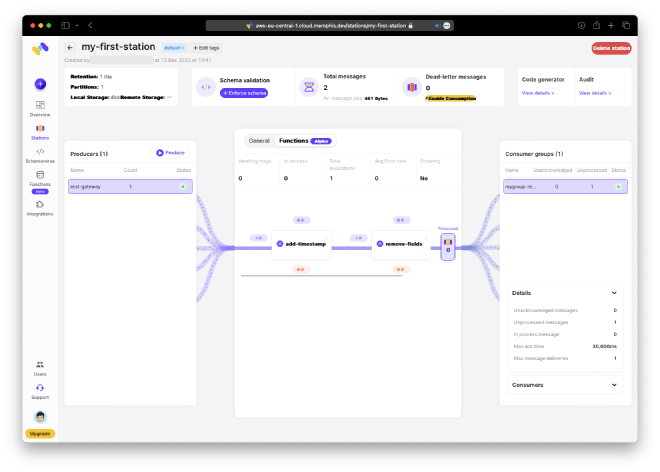

Now, we can see our pipeline. The input is on the left, the station is in the middle, and the output is on the right. The station will host our functions, but more details on that will be provided later.

Create JSON Web Token #

To produce and consume messages, we require a JSON Web Token (JWT) for authentication.

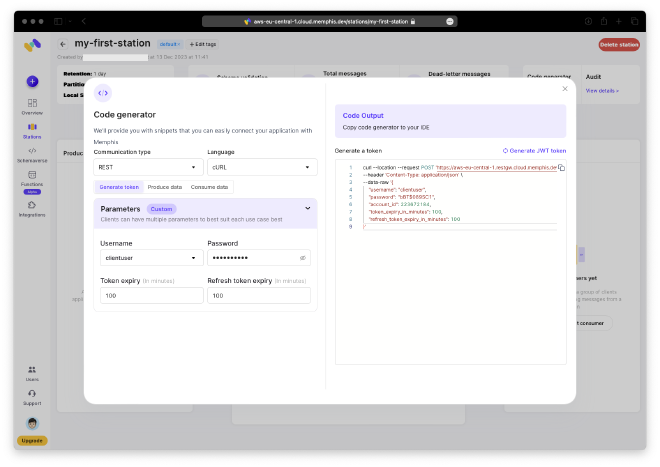

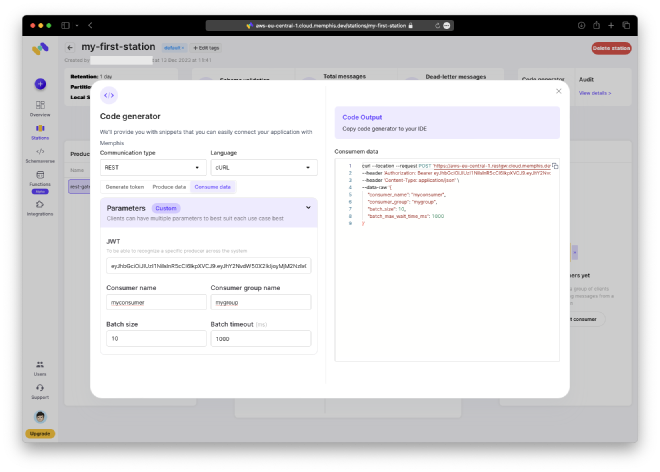

Now, a word about something in the web UI that, in my opinion, could be improved. There is a convenient feature called the “Code generator” in the top right corner. Since it is so useful while getting started, I would place it in a more prominent position. This is just my preference: once everything is set up, you rarely need it, so its current out-of-the-way location may well make sense.

Anyway, let’s create the REST calls we need. All it takes is a cURL command that can be executed in the terminal; we only need to provide a user and the corresponding password.

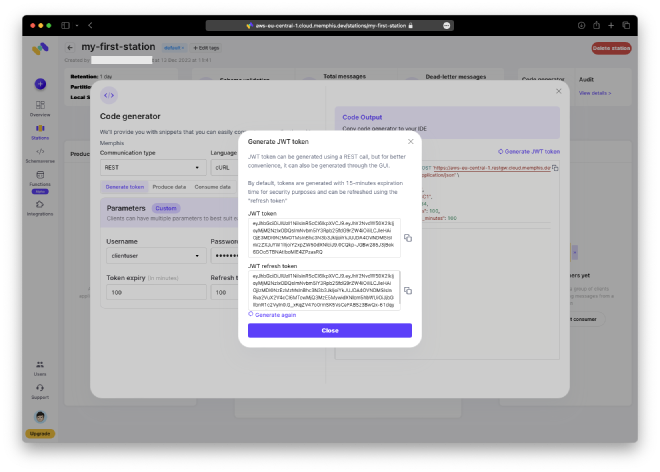

Next, click on “Generate JWT token” to generate the token. You can then copy and paste both the token and the refresh token to a secure location for future use.

To use the curl command, simply copy it and paste it into your terminal, then execute it.

    % curl --location --request POST 'https://aws-eu-central-1.restgw.cloud.memphis.dev/auth/authenticate' \
      --header 'Content-Type: application/json' \
      --data-raw '{
        "username": "clientuser",
        "password": "bBT$089SC1",
        "account_id": 223672184,
        "token_expiry_in_minutes": 100,
        "refresh_token_expiry_in_minutes": 100
      }'

{"expires_in":102148303560000,"jwt":"eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJhY2NvdW50X2lkIjoyMjM2NzIxODQsImNvbm5lY3Rpb25fdG9rZW4iOiIiLCJleHAiOjE3MDI0NzE3MjYsInBhc3N3b3JkIjoiYkJUJDA4OVNDMSIsInVzZXJuYW1lIjoiY2xpZW50dXNlciJ9.vPHuPLqYzWMlfvlgClQdog5QPjuXIbppXIIBx0Few4c","jwt_refresh_token":"eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJhY2NvdW50X2lkIjoyMjM2NzIxODQsImNvbm5lY3Rpb25fdG9rZW4iOiIiLCJleHAiOjE3MDI0NzE3MjYsInBhc3N3b3JkIjoiYkJUJDA4OVNDMSIsInRva2VuX2V4cCI6MTcwMjQ3MTcyNiwidXNlcm5hbWUiOiJjbGllbnR1c2VyIn0.nQuuplxoTqyzUxVjQ7Dk2uzDBBadGpqaXrl5zTnVtfc","refresh_token_expires_in":102148303560000}%
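A JWT is signed but not encrypted, so anyone holding the token can read its claims. The following Python sketch decodes the payload segment without verifying the signature, which is handy for checking when a token expires. The `decode_jwt_payload` helper and the sample claims are made up for illustration; to inspect your own token, pass in the `jwt` value from the response above.

```python
import base64
import json
from datetime import datetime, timezone


def decode_jwt_payload(token: str) -> dict:
    """Decode the payload (second segment) of a JWT without verifying its signature."""
    payload_segment = token.split(".")[1]
    # JWT segments are base64url-encoded with the padding stripped; restore it.
    padding = "=" * (-len(payload_segment) % 4)
    return json.loads(base64.urlsafe_b64decode(payload_segment + padding))


def _b64url(data: dict) -> str:
    """Encode a dict the way JWT segments are encoded (helper for the sample below)."""
    raw = json.dumps(data, separators=(",", ":")).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


# Hypothetical sample token with a placeholder signature, built only for this demo.
sample_claims = {"account_id": 1, "exp": 1702471726, "username": "clientuser"}
sample_token = ".".join(
    [_b64url({"alg": "HS256", "typ": "JWT"}), _b64url(sample_claims), "signature"]
)

claims = decode_jwt_payload(sample_token)
expires_at = datetime.fromtimestamp(claims["exp"], tz=timezone.utc)
print(claims["username"], "token expires at", expires_at.isoformat())
```

Because the claims are readable by anyone who has the token, it is worth storing the token as carefully as the password itself.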

Produce Message #

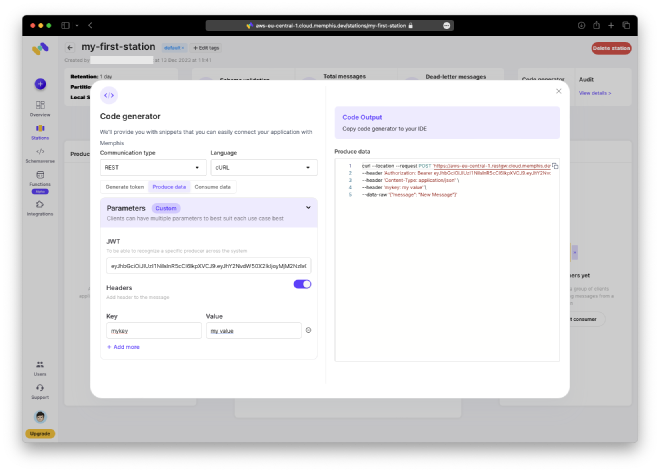

Now it’s time to generate our first message. Open the code generator again and switch to the “Produce data” tab.

Once you have finished, copy and paste the command into your terminal and execute it.

    % curl --location --request POST 'https://aws-eu-central-1.restgw.cloud.memphis.dev/stations/my-first-station/produce/single' \
      --header 'Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJhY2NvdW50X2lkIjoyMjM2NzIxODQsImNvbm5lY3Rpb25fdG9rZW4iOiIiLCJleHAiOjE3MDI0NzMxOTMsInBhc3N3b3JkIjoiYkJUJDA4OVNDMSIsInVzZXJuYW1lIjoiY2xpZW50dXNlciJ9.0CQkp-JGBw285J3jBok6GOo5TBNAtIboMlE4ZPzasRQ' \
      --header 'Content-Type: application/json' \
      --header 'mykey: my value' \
      --data-raw '{"message": "New Message"}'

{"error":null,"success":true}%
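If you would rather produce from code than from the terminal, the same request can be assembled with Python's standard library. This is a sketch under assumptions: the URL pattern and headers are copied from the cURL command above, while `build_produce_request` is a hypothetical helper name and `<your-jwt>` is a placeholder. The request is only built here, not sent.

```python
import json
import urllib.request

# Base URL taken from the cURL command above; yours may differ by region.
BASE_URL = "https://aws-eu-central-1.restgw.cloud.memphis.dev"


def build_produce_request(station: str, jwt: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that produces a single message."""
    return urllib.request.Request(
        url=f"{BASE_URL}/stations/{station}/produce/single",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {jwt}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_produce_request("my-first-station", "<your-jwt>", {"message": "New Message"})
print(request.get_method(), request.full_url)
# To actually send it (needs a valid token and network access):
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```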

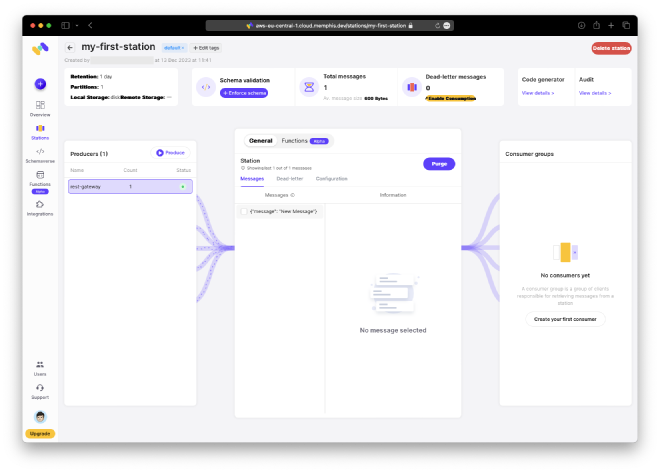

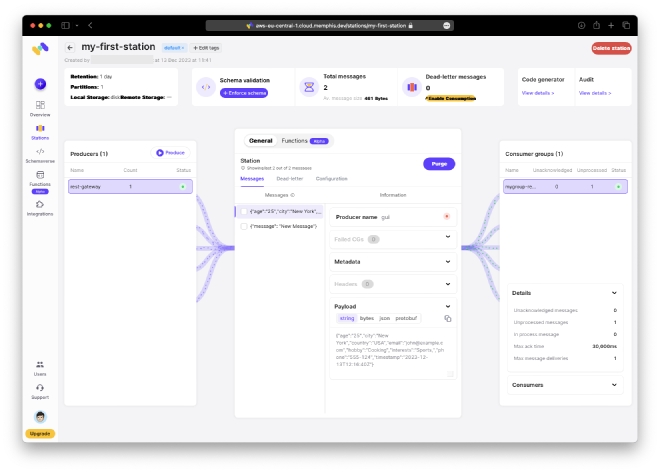

Let’s do a quick check: We can now view the received message within the station. By clicking on it, we can access all the details.

Consume Message #

The final step in our pipeline is to consume the message. Follow the same process as before: open the code generator, copy and paste the command, and execute it.

As a result, we receive the message that we produced earlier. Note that the message is unchanged: no data has been altered.

    % curl --location --request POST 'https://aws-eu-central-1.restgw.cloud.memphis.dev/stations/my-first-station/consume/batch' \
      --header 'Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJhY2NvdW50X2lkIjoyMjM2NzIxODQsImNvbm5lY3Rpb25fdG9rZW4iOiIiLCJleHAiOjE3MDI0NzMxOTMsInBhc3N3b3JkIjoiYkJUJDA4OVNDMSIsInVzZXJuYW1lIjoiY2xpZW50dXNlciJ9.0CQkp-JGBw285J3jBok6GOo5TBNAtIboMlE4ZPzasRQ' \
      --header 'Content-Type: application/json' \
      --data-raw '{
        "consumer_name": "myconsumer",
        "consumer_group": "mygroup",
        "batch_size": 10,
        "batch_max_wait_time_ms": 1000
      }'

[{"message":"{\"message\": \"New Message\"}","headers":{"Accept":"*/*","Authorization":"Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJhY2NvdW50X2lkIjoyMjM2NzIxODQsImNvbm5lY3Rpb25fdG9rZW4iOiIiLCJleHAiOjE3MDI0NzMxOTMsInBhc3N3b3JkIjoiYkJUJDA4OVNDMSIsInVzZXJuYW1lIjoiY2xpZW50dXNlciJ9.0CQkp-JGBw285J3jBok6GOo5TBNAtIboMlE4ZPzasRQ","Content-Length":"26","Content-Type":"application/json","Host":"aws-eu-central-1.restgw.cloud.memphis.dev","Mykey":"my value","User-Agent":"curl/8.1.2"}}]%
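One detail in the response above is easy to miss: the `message` field is itself a JSON-encoded string, so a consumer has to decode twice. The Python sketch below shows this on a shortened copy of the response (most headers removed). `parse_batch` is a hypothetical helper, and it assumes every payload is valid JSON.

```python
import json

# Shortened copy of the response above; the real one carries more headers.
raw_response = r'[{"message":"{\"message\": \"New Message\"}","headers":{"Content-Type":"application/json","Mykey":"my value"}}]'


def parse_batch(raw: str) -> list:
    """Decode a consume/batch response into a list of payload/headers pairs."""
    parsed = []
    for item in json.loads(raw):
        # The payload arrives as a JSON string inside the JSON response,
        # so it needs a second json.loads call.
        parsed.append({"payload": json.loads(item["message"]), "headers": item["headers"]})
    return parsed


messages = parse_batch(raw_response)
print(messages[0]["payload"]["message"])
print(messages[0]["headers"]["Mykey"])
```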

Add Timestamp Function #

The fun part begins now. I mean, it’s nice to produce and consume messages, but what I’m really interested in is adding some business logic to transform the messages according to my use case.

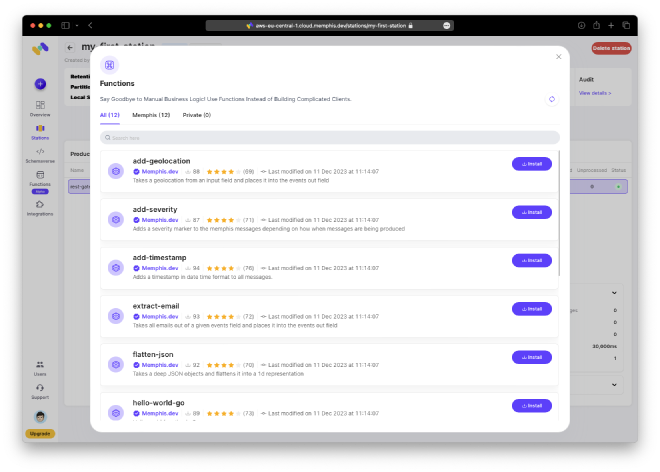

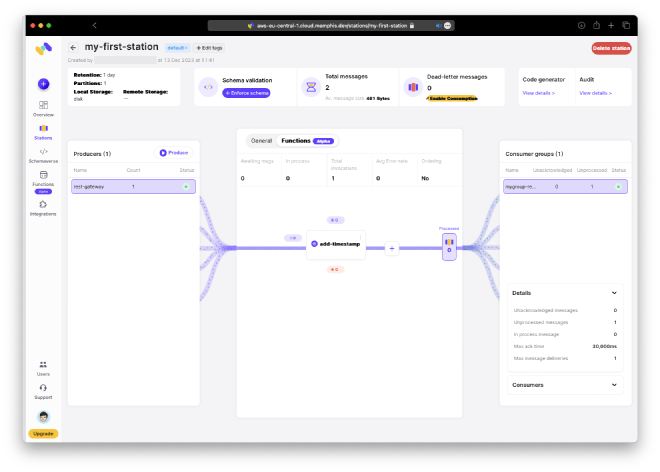

Inside your station, click on “Functions → Add function”.

Then install “add-timestamp”.

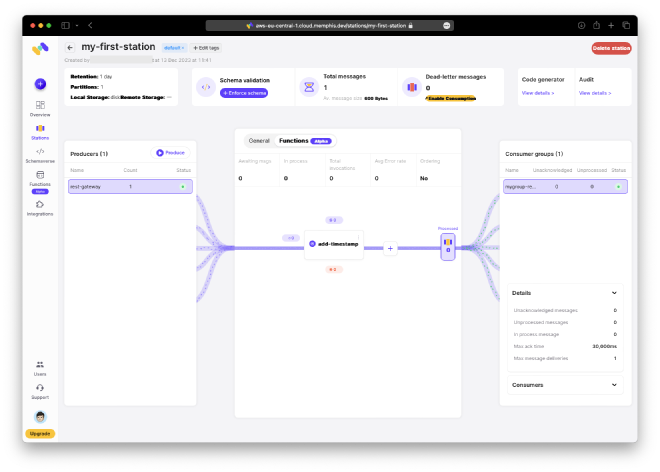

Once it has been installed, you can first test the function and then attach it to your station.

Let’s test our pipeline. Any message I produce will now have an additional field with a timestamp.

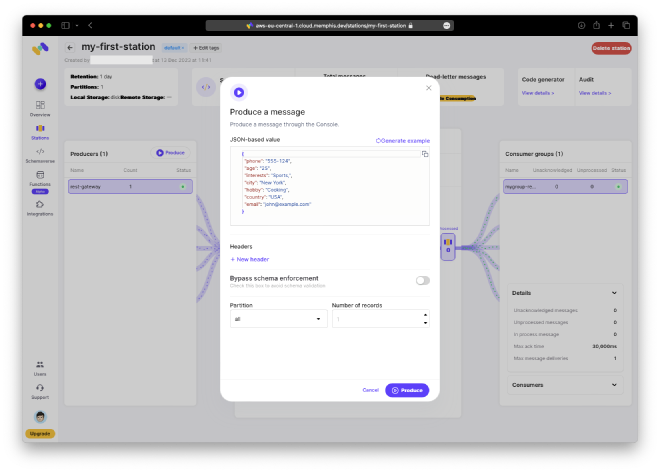

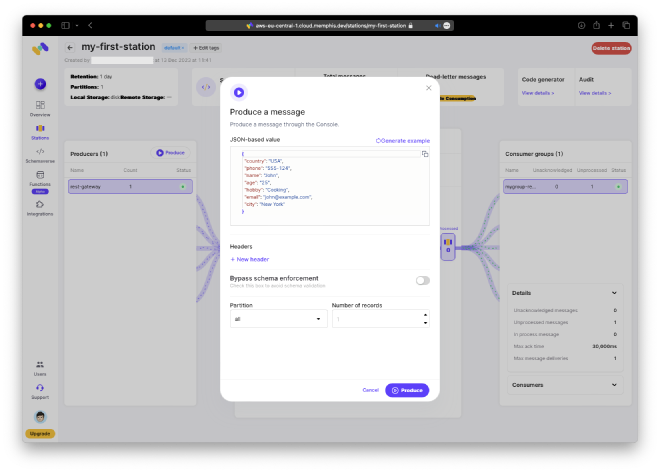

This time, I am producing using the web UI to demonstrate this option as well.

Now, our received message includes a timestamp when checked in our station.
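Conceptually, such an enrichment step is a small transformation from payload to payload. The Python sketch below shows the idea only; it is not the code behind the ready-made “add-timestamp” function, and the field name `timestamp` and the ISO 8601 format are assumptions on my part.

```python
import json
from datetime import datetime, timezone


def add_timestamp(payload: bytes, field: str = "timestamp") -> bytes:
    """Return the JSON payload with the current UTC time added under `field`."""
    message = json.loads(payload)
    # Assumption: ISO 8601 in UTC; the real function may use another format.
    message[field] = datetime.now(timezone.utc).isoformat()
    return json.dumps(message).encode("utf-8")


enriched = json.loads(add_timestamp(b'{"message": "New Message"}'))
print(enriched)
```

The producer stays untouched: it keeps sending the same message, and the extra field appears only on the way through the station.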

Remove Data Function #

Let’s introduce another function. This time, we will add a function for scrubbing data. As mentioned in the introduction, this can be a crucial requirement for software that deals with personal information, because the GDPR mandates strict data handling. In Germany, we have some of the strictest regulations worldwide when it comes to personally identifiable information (PII). Designing your application to comply with the GDPR right from the start is of utmost importance. The ability to easily incorporate a data-scrubbing mechanism provides great flexibility and saves significant time and costs.

But this is just one useful example of what Memphis Functions can offer. I often encounter situations where pseudonymization is required. This involves replacing certain data with a key and storing that key separately, together with the original value it replaced. Anyone reading the record will then only see the key and not the PII. Another option is to create a simple function that just replaces the content with a generated key.
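The key-replacement idea described above can be sketched in a few lines of Python. Everything here is hypothetical: the `pseudonymize` and `reidentify` helpers, the in-memory `vault` dictionary (a real system would use a separately secured store), and the sample record.

```python
import uuid


def pseudonymize(record: dict, fields: list, vault: dict) -> dict:
    """Replace each listed field with a random key and keep the original value in the vault."""
    result = dict(record)
    for field in fields:
        if field in result:
            key = uuid.uuid4().hex
            vault[key] = {"field": field, "value": result[field]}
            result[field] = key
    return result


def reidentify(record: dict, vault: dict) -> dict:
    """Restore original values for every field whose value is a known vault key."""
    result = dict(record)
    for field, value in record.items():
        entry = vault.get(value) if isinstance(value, str) else None
        if entry is not None and entry["field"] == field:
            result[field] = entry["value"]
    return result


vault = {}  # stand-in for a separately stored, access-controlled key store
patient = {"name": "Jane Doe", "social_security_number": "000-00-0000", "ward": "B2"}

masked = pseudonymize(patient, ["name", "social_security_number"], vault)
restored = reidentify(masked, vault)
print(masked)
```

Readers of `masked` see only opaque keys, while a service with access to the vault can still recover the original record.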

In this tutorial, however, we will focus on data scrubbing and use the ready-made function. Perhaps I will try out Memphis private functions by writing a function that demonstrates the pseudonymization use case.

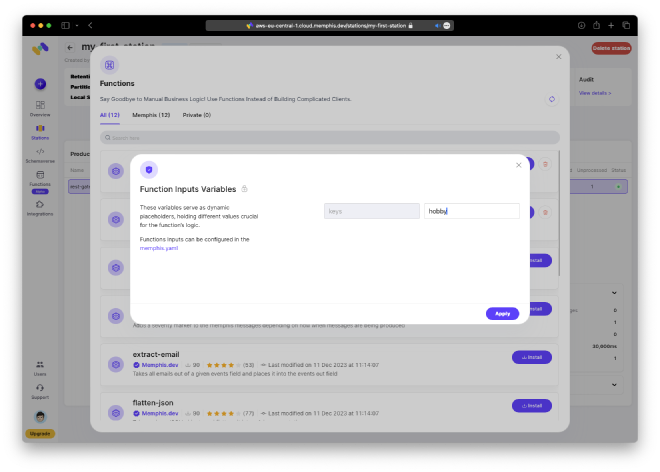

Under “Function,” click the “+” button to add a new function that will be executed after the “add-timestamp” function.

To install and attach the “remove-fields” function, we need to add a parameter to the function. In this case, the parameter is the name of the field that will be removed. Let’s remove the “hobby” field. We chose this field because the code generator generates a template message with this field already included.

Of course, this depends on your custom message. In our fictional scenario, this could be the social security number or the dietary preferences, for example.
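The effect of a field-removal step with a field-name parameter can be sketched as follows in Python. This is not the implementation of the ready-made “remove-fields” function; the `remove_fields` helper handles top-level fields only, and the sample message (including its timestamp value) is made up.

```python
import json


def remove_fields(payload: bytes, fields: list) -> bytes:
    """Return the JSON payload without the listed top-level fields."""
    message = json.loads(payload)
    for field in fields:
        # pop with a default so that a missing field is not an error
        message.pop(field, None)
    return json.dumps(message).encode("utf-8")


# Made-up message that has already passed a timestamp step.
incoming = b'{"name": "Jane", "hobby": "chess", "timestamp": "2023-12-13T12:00:00+00:00"}'
cleaned = json.loads(remove_fields(incoming, ["hobby"]))
print(cleaned)
```

Unlike an allow-list, this deny-list style lets any field through that is not explicitly named, so it needs updating whenever producers start sending new sensitive fields.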

After we are done, we can now see the second function in our station.

Let’s test again by generating a new message.

Then check that the message

- still has the timestamp

- but no longer has the hobby field

Looks good!

Conclusion #

This was a crash course on data pipelining with Memphis Functions. This brand new feature already offers real benefits for developers, allowing us to be more efficient and save money in the process. Although there are some issues with the web UI not functioning as expected, I am confident that the Memphis team will address them soon.

The core offering seems to be reliable and worth further exploration. As mentioned earlier, you have the option to use private functions hosted in your own GitHub repository. This allows you to easily make changes to the function locally and push updates to GitHub to modify your pipeline.

In the upcoming second part, we will delve deeper into Memphis private functions. First, we will create a GitHub repository to host your private functions. In the second step, I will show you how to link it with your Memphis account. Lastly, we will implement our custom function and make it live.

We will also take a look at what Memphis refers to as the “Schemaverse”. This is their solution for providing a service that ensures schema consistency across your services.

Please share your thoughts on this and the idea of the follow-up post by leaving a comment below or sending me a direct message.